- AI models have managed to create images that already seem indistinguishable from reality

- Systems that detect AI-generated images don’t help; they make the problem worse

- And that can make us stop believing even legitimate images and videos

Deepfakes, the fake images produced by generative AI models, are becoming more and more convincing. They led us to think that the Pope might have worn a very modern anorak, or that Donald Trump had been forcibly arrested. We need to pay ever closer attention to the images we see on social networks: not only to the fake ones, but also to the real ones.

Chasing disinformation. Eliot Higgins is an OSINT (open-source intelligence) analyst. A few years ago he began gathering his findings on a website called Bellingcat, and since then the organization has become a benchmark in this field. He investigates all kinds of events, and he is well aware that the current challenge in the field of disinformation is posed by artificial intelligence.

Deceiving both sides. As he stated in a recent interview with Wired, “When people think of AI, they think, ‘Oh, it’s going to trick people into believing things that aren’t true.’ But what it’s really doing is giving people permission not to believe things that are true.” In other words, just the opposite.

We no longer even trust what is true. With so much misinformation around, deepfakes and AI can also lead us to disbelieve what we are seeing and think “ah, it’s another AI-generated image” when in reality it is a genuine image of a real situation.

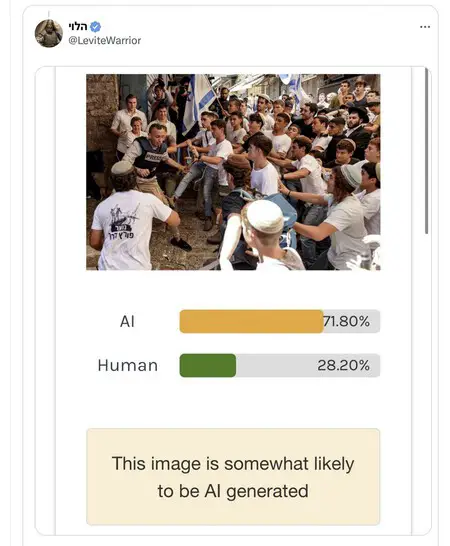

Real or artificial? He demonstrated this recently by reposting a striking image. A website that supposedly detects AI-generated images claimed that a photo of a journalist surrounded by dozens of people was likely generated by artificial intelligence. The problem is that the image was real (there are other photos of the same event), so the detection service got it wrong.

The danger of machines identifying photos. These photo detection systems shape our judgment because we tend to trust their conclusions. That is what is happening to a photographer who uploads her photos to Instagram and is seeing conventional photos she sends to the service flagged as AI-generated.

But we can also make mistakes. Automatic detection systems do not seem to work in these cases, but our eyes can deceive us too: the evolution of platforms such as Midjourney has shown how astonishing the photorealism of these generative AI models can be, and it is increasingly difficult to distinguish fact from fiction.

And then there is video. In reality, images are only part of the problem: the generation of deepfakes of people who say coherent things, whose lips move in sync with their words, and who seem entirely legitimate is being perfected. If a video is fake but plausible, we may believe it. And that will mean that even when a video is real, we may not believe it.

Image: Almal3003.01 with Midjourney
