OpenAI has taken a significant step in artificial intelligence research by finding a way to better understand how its language models (the family of AI that includes GPT-4, for example) work. The task has always been difficult because of the complexity of these systems, which until now have been a black box whose interior was impossible to observe… even for the creators of these AIs themselves.

While engineers can design, evaluate, and repair cars based on the specifications of their components, neural networks are not designed directly: instead, the algorithms that train them are designed, resulting in networks that are not fully understood and cannot be easily broken down into identifiable parts.

This, of course, makes it harder to reason about the safety of AI in the way one might reason about the safety of a car.

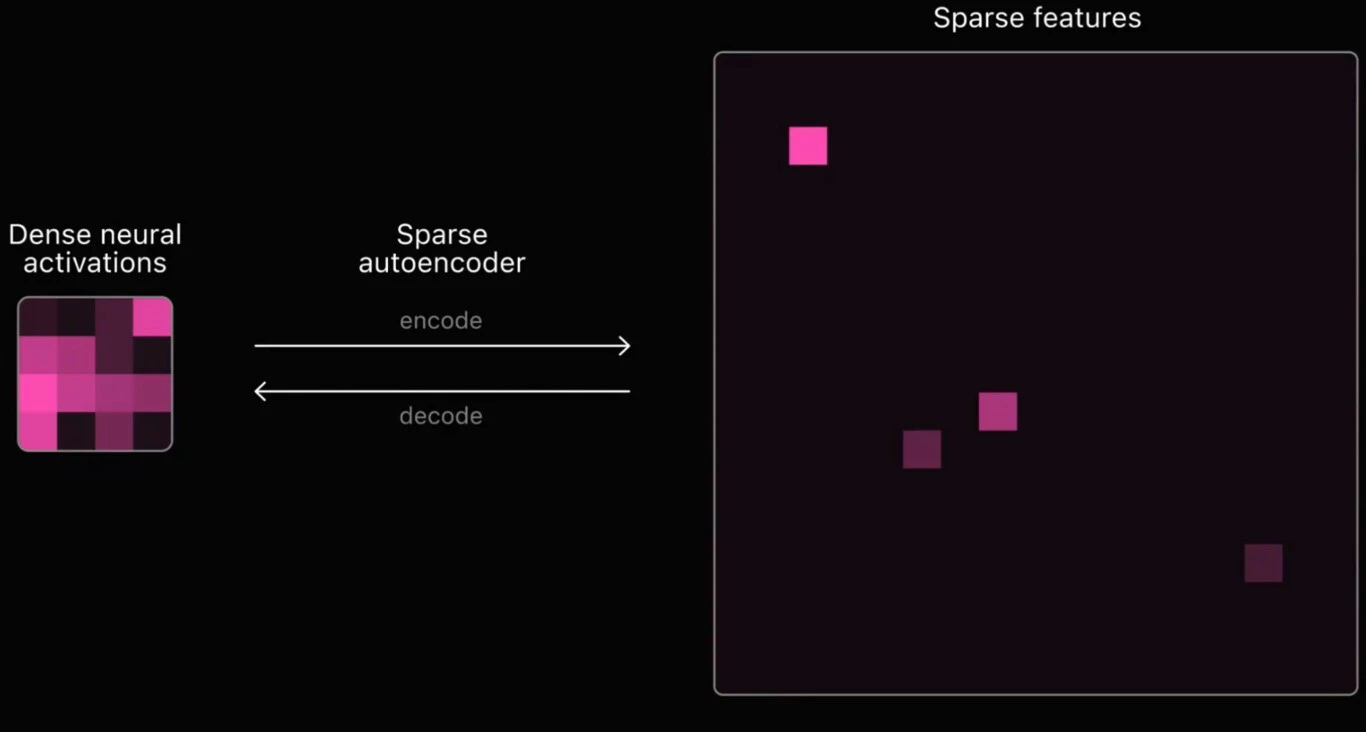

To better understand AI, OpenAI scientists are looking for “features” or patterns within the model that can be more easily interpreted. It’s like trying to identify specific parts within a very complex machine.

OpenAI bets on ‘sparse’ technology

OpenAI has developed new techniques to find these features within its AI models. It has managed to identify 16 million of these patterns in GPT-4, which is a major breakthrough. To check whether the patterns are understandable, it has published examples of texts on which each pattern is activated.
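The check described above, looking at the texts on which a pattern fires most strongly, can be sketched in a few lines of Python. This is only an illustration: the snippets, the feature labels used as keys, and the activation numbers are invented for the example, and the function name `top_activating_examples` is hypothetical. None of it is OpenAI's data or tooling.

```python
# Toy illustration: all snippets and activation values below are made up.
def top_activating_examples(activations, feature, n=2):
    """Return up to n snippets on which `feature` fires most strongly."""
    scored = [(acts.get(feature, 0.0), text) for text, acts in activations.items()]
    scored.sort(reverse=True)
    # Drop snippets on which the feature does not fire at all.
    return [text for score, text in scored[:n] if score > 0.0]

activations = {
    "Rents went up again this year.": {"price increases": 0.9},
    "Nobody is perfect, and that is fine.": {"human imperfection": 0.8},
    "Fuel now costs more than it did last spring.": {"price increases": 0.7},
    "Who could have seen that coming?": {"rhetorical questions": 0.6},
}

examples = top_activating_examples(activations, "price increases")
for text in examples:
    print(text)
```

If the top snippets for a feature share an obvious theme, a human reader can give that feature a label; if they look unrelated, the feature is hard to interpret.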

To do this, OpenAI has opted for sparse autoencoders, a technique that identifies the handful of “features” that matter for producing a given output, similar to the small set of concepts that a person might have in mind when reasoning about a situation.
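To make the idea concrete, here is a minimal sketch of the forward pass of a sparse autoencoder. It rests on several assumptions that do not come from the article: the sizes are toy values, the weights are random rather than trained, the decoder simply reuses the encoder weights, and sparsity is enforced by keeping only the `K` strongest features. It is not a description of OpenAI's actual implementation.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def top_k_sparse(values, k):
    """Keep the k largest entries (clipped at zero) and zero out the rest."""
    keep = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    return [max(values[i], 0.0) if i in keep else 0.0 for i in range(len(values))]

def encode(activation, encoder, k):
    """Map a dense model activation to a sparse vector of feature strengths."""
    return top_k_sparse(matvec(encoder, activation), k)

def decode(features, decoder):
    """Rebuild the dense activation as a weighted sum of feature directions."""
    return matvec(decoder, features)

random.seed(0)
D_MODEL, N_FEATURES, K = 8, 32, 4  # toy sizes, chosen only for illustration

# Untrained random weights; a real autoencoder learns these from model activations.
encoder = [[random.gauss(0, 1) for _ in range(D_MODEL)] for _ in range(N_FEATURES)]
# Decoder is the transposed encoder (tied weights, a simplification).
decoder = [[encoder[f][d] for f in range(N_FEATURES)] for d in range(D_MODEL)]

activation = [random.gauss(0, 1) for _ in range(D_MODEL)]
features = encode(activation, encoder, K)
reconstruction = decode(features, decoder)

active = [i for i, v in enumerate(features) if v != 0.0]
print(f"{len(active)} of {N_FEATURES} features active: {active}")
```

The point of the sparsity step is that each input is explained by only a few features at a time, which is what makes it feasible to look at them one by one.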

Thus, for example, they found specific patterns that are activated by themes such as “Human Imperfection”, “Price Increases” and “Rhetorical Questions”… It’s like finding specific pieces of information that we can recognize and understand.

OpenAI has now developed new methodologies that allow it to scale its sparse autoencoders to tens of millions of features in advanced AI models. The company hopes that the first results of this technology can be used to monitor and adjust the behavior of its cutting-edge models.

Importantly, OpenAI isn’t alone in this effort: companies like Anthropic are also working to advance sparse autoencoders.

However, despite promising advances, the application of this technology is still in its early stages: many of the patterns found are still difficult to interpret and do not always work consistently; moreover, the process of breaking down AI into these patterns doesn’t capture all of its behavior, which means there’s still a lot to discover.
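One simple way to put a number on "doesn't capture all of its behavior" is to compare an activation inside the model with the version rebuilt from the patterns. The sketch below is an assumption for illustration: the article does not say which metric OpenAI uses, and the function name `unexplained_fraction` and the two vectors are hypothetical.

```python
def unexplained_fraction(original, reconstruction):
    """Squared reconstruction error divided by the squared norm of the original."""
    error = sum((o - r) ** 2 for o, r in zip(original, reconstruction))
    norm = sum(o ** 2 for o in original)
    return error / norm

# Hypothetical activation and an imperfect reconstruction of it.
original = [3.0, 4.0]
reconstruction = [3.0, 0.0]

missed = unexplained_fraction(original, reconstruction)
print(f"{missed:.0%} of the activation is not captured")
```

A value of zero would mean the patterns reproduce the activation exactly; anything above zero is behavior the decomposition leaves out.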

“To fully map concepts in advanced language models, it may be necessary to scale to billions or trillions of features, which presents a considerable challenge, even with the improved techniques.”

Via | OpenAI
